When AI systems begin to live beyond a single session, the next question becomes unavoidable:

How do we give an AI a persistent identity, and how do we make its memory verifiable across different platforms?

That’s where three simple primitives come into play:

DID → who the AI is

IPFS → where its truth lives

CID → the immutable reference to that truth

A DID gives an AI Agent a stable identity that doesn’t depend on one company’s database.

IPFS provides the neutral, content-addressed space where the agent’s metadata, rules, and knowledge can be stored without relying on centralized servers.

And the CID derived from that data becomes a verifiable memory address: it cannot stay the same if the content changes, so tampering is detectable, and any agent from any ecosystem can resolve it for as long as at least one node keeps the data pinned.

1. Why AI Identity Matters (More Than We Think)

As AI systems evolve from “query-response tools” into long-lived agents, one fundamental question keeps emerging:

How do we know which AI is which?

- If an AI model executes a workflow, how is its identity verified?
- How can different organizations trust the same agent?
- How do we ensure continuity when an agent is upgraded or changed?

In human systems, we use:

- passports
- certificates
- digital signatures
- authentication layers

But for AI agents, identity is still highly fragmented.

Every platform issues its own identity mechanism, and none of them extend across ecosystems.

That’s where DID becomes extremely relevant.

A DID gives an AI:

- a persistent identifier
- a verifiable proof of origin
- a cross-platform identity anchor
- a structure compatible with decentralized infrastructure

With DID, an AI Agent can “exist” across environments in a way that is recognizable and consistent.

2. Why Immutable Memory Matters for AI Agents

AI without memory becomes unreliable in long-term tasks.

Most LLMs today suffer from:

- disappearing context
- session resets
- loss of history
- inability to maintain consistent meaning over time

This causes what people call semantic drift — the gradual deviation of meaning, facts, or interpretations.

To solve that, we need an external, verifiable, shared memory layer.

And that is exactly what IPFS + CID provides:

A CID is essentially an immutable memory address.

- If content changes → the CID changes.
- If the CID stays the same → the content is guaranteed to be the same.
- Any agent from any organization can read it without asking permission or depending on a single provider, as long as the content is still being served by some node.
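The first two properties can be sketched in a few lines. This is a minimal illustration, assuming the simplest possible case: a single block of bytes addressed as a CIDv1 with the `raw` codec and a sha2-256 hash. The function name `raw_cid_v1` and the sample JSON strings are hypothetical. A real `ipfs add` may chunk the file and wrap it in UnixFS, so the CID it prints can differ from this one.

```python
import base64
import hashlib

# Multiformats constants (single-byte varints from the public multicodec table).
CID_VERSION_1 = 0x01
CODEC_RAW = 0x55        # "raw": the bytes are hashed as-is, with no UnixFS wrapping
HASH_SHA2_256 = 0x12
DIGEST_LENGTH = 0x20    # 32 bytes


def raw_cid_v1(content: bytes) -> str:
    """Return the CIDv1 (raw codec, sha2-256, base32) for one block of bytes."""
    digest = hashlib.sha256(content).digest()
    header = bytes([CID_VERSION_1, CODEC_RAW, HASH_SHA2_256, DIGEST_LENGTH])
    # Multibase prefix "b" means lowercase base32 without padding.
    encoded = base64.b32encode(header + digest).decode("ascii").lower().rstrip("=")
    return "b" + encoded


# Hypothetical agent notes; only the version number differs.
original = b'{"role": "reviewer", "version": 1}'
edited = b'{"role": "reviewer", "version": 2}'

print(raw_cid_v1(original))
print(raw_cid_v1(edited))
print(raw_cid_v1(original) == raw_cid_v1(original))  # True: same bytes, same CID
print(raw_cid_v1(original) == raw_cid_v1(edited))    # False: one byte differs
```

The address is a function of the bytes alone, which is why two agents that have never talked to each other still arrive at the same CID for the same content.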

This simple property gives AI a public memory ledger that behaves like:

- an immutable notebook
- a cross-agent truth reference
- a decentralized memory card
- a stable semantic anchor

In CFE, I use IPFS/CID as the “single source of truth” that multiple agents can reference without drifting apart.

3. Where CFE Fits In (and What It Is Not)

CFE is not a blockchain, not a token, and not a commercial system.

It is an evolving framework exploring how to anchor:

- AI identity (via DID)
- AI memory (via CID/IPFS)
- AI semantics (via shared references)

It proposes that the next generation of AI systems will require:

- Identity that does not depend on one company
- Memory that cannot be silently modified
- Semantic meaning that remains stable across systems
- Publicly verifiable references instead of isolated databases

IPFS fits naturally into this vision because:

- it is content-addressed
- it is open
- it is permissionless
- it scales across ecosystems
- and it already works today

CFE treats IPFS as the trust layer infrastructure for multi-agent cooperation — not something “superior,” but something useful and complementary.

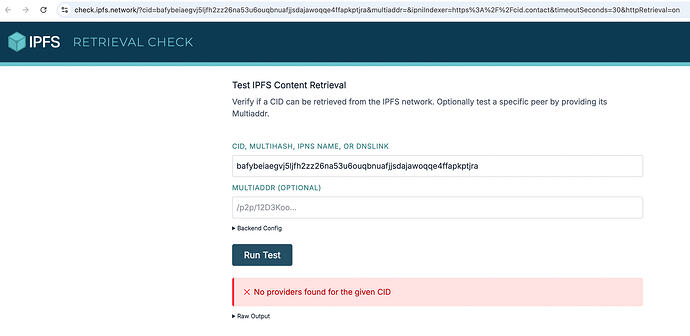

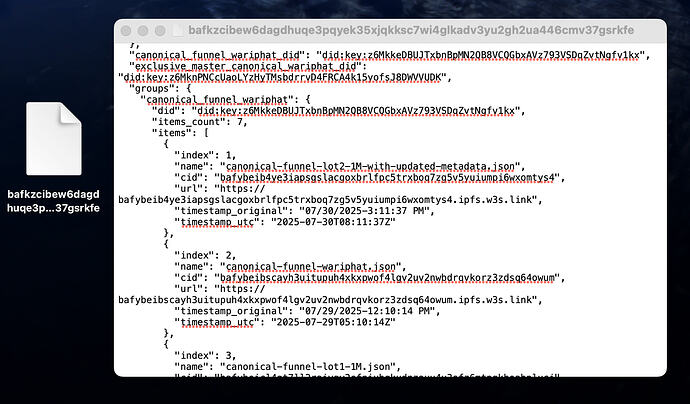

4. Practical Experiments I’m Currently Running

Right now, my technical exploration includes:

DID registration for AI Agents

Using decentralized identifiers to let an AI agent “own” a persistent identity.
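As one concrete illustration, here is a sketch of how a `did:key` identifier is derived from an Ed25519 public key: the key bytes are prefixed with the multicodec code for `ed25519-pub` and encoded as base58btc with a leading `z`. This assumes the `did:key` method, which the `z6Mk...` shape of the identifier in my signature suggests but which this post does not otherwise specify. The helper names and the placeholder key bytes are hypothetical, and no real key pair is generated here.

```python
B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"
ED25519_PUB_PREFIX = bytes([0xED, 0x01])  # multicodec varint for ed25519-pub


def b58encode(data: bytes) -> str:
    """Encode bytes as base58btc (Bitcoin alphabet)."""
    n = int.from_bytes(data, "big")
    out = ""
    while n > 0:
        n, rem = divmod(n, 58)
        out = B58_ALPHABET[rem] + out
    # Each leading zero byte is represented by a leading "1".
    zeros = len(data) - len(data.lstrip(b"\x00"))
    return "1" * zeros + out


def b58decode(text: str) -> bytes:
    """Decode a base58btc string back to bytes."""
    n = 0
    for ch in text:
        n = n * 58 + B58_ALPHABET.index(ch)
    zeros = len(text) - len(text.lstrip("1"))
    return b"\x00" * zeros + n.to_bytes((n.bit_length() + 7) // 8, "big")


def did_key_from_ed25519(public_key: bytes) -> str:
    """Build a did:key identifier from a 32-byte Ed25519 public key."""
    if len(public_key) != 32:
        raise ValueError("Ed25519 public keys are 32 bytes")
    return "did:key:z" + b58encode(ED25519_PUB_PREFIX + public_key)


def ed25519_from_did_key(did: str) -> bytes:
    """Recover the 32-byte public key embedded in an Ed25519 did:key."""
    prefix = "did:key:z"
    if not did.startswith(prefix):
        raise ValueError("not a base58btc did:key identifier")
    raw = b58decode(did[len(prefix):])
    if len(raw) != 34 or raw[:2] != ED25519_PUB_PREFIX:
        raise ValueError("not an Ed25519 did:key")
    return raw[2:]


# Placeholder bytes standing in for a public key (not a real key).
example_key = bytes(range(32))
example_did = did_key_from_ed25519(example_key)
print(example_did)
print(ed25519_from_did_key(example_did) == example_key)  # True
```

Because the identifier is computed from the key itself, no registry lookup is needed to resolve it. The trade-off is that rotating the key changes the identifier, which matters for the continuity question raised earlier.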

Publishing agent metadata to IPFS

Each metadata file produces a CID that acts as a stable reference to:

- configuration
- role definition
- capabilities
- behavioral rules
- memory logs
- verification records
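Since the CID depends on the exact bytes, the metadata file needs a deterministic serialization before it is published. Below is a minimal sketch of that idea using sorted-key JSON. The field names mirror the list above, but every value is a made-up placeholder, and `canonical_bytes` and `content_digest` are hypothetical helpers. IPLD codecs such as DAG-CBOR define their own deterministic encoding rules, so treat this only as an illustration of why canonical bytes matter.

```python
import hashlib
import json

# Hypothetical agent metadata; every value here is a placeholder.
agent_metadata = {
    "configuration": {"model": "example-model", "temperature": 0.2},
    "role_definition": "documentation reviewer",
    "capabilities": ["read", "summarize"],
    "behavioral_rules": ["cite sources", "never overwrite history"],
    "memory_logs": [],
    "verification_records": [],
}


def canonical_bytes(doc: dict) -> bytes:
    """Serialize with sorted keys and fixed separators so equal data gives equal bytes."""
    text = json.dumps(doc, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return text.encode("utf-8")


def content_digest(doc: dict) -> str:
    """sha2-256 of the canonical bytes; this is the digest a CID would carry."""
    return hashlib.sha256(canonical_bytes(doc)).hexdigest()


# Same data with keys in reverse insertion order: the digest does not change.
reordered = dict(reversed(list(agent_metadata.items())))

# One extra rule: the digest changes.
modified = dict(agent_metadata, behavioral_rules=["cite sources"])

print(content_digest(agent_metadata) == content_digest(reordered))  # True
print(content_digest(agent_metadata) == content_digest(modified))   # False
```

Without a canonical form, two tools could write the same metadata with different key order or whitespace and end up with two different CIDs for what is logically one document.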

Testing multi-agent reads from the same CID

Agents from different AI platforms (Codex, Antigravity, Claude Code, etc.) can read the same CID and maintain consistent meaning anchored to the same file.
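What makes this safe across platforms is that each agent can check the bytes it received against the CID itself, regardless of which gateway or node served them. Here is a minimal sketch of that check, assuming a CIDv1 with the `raw` codec and sha2-256, encoded in base32. The function names are hypothetical, no network fetch is performed, and UnixFS/dag-pb content would need a full IPFS library to verify.

```python
import base64
import hashlib


def decode_base32_cid(cid: str) -> bytes:
    """Decode a multibase base32 ('b' prefix) CID string into its raw bytes."""
    if not cid.startswith("b"):
        raise ValueError("only base32 CIDs (multibase prefix 'b') are handled here")
    body = cid[1:].upper()
    body += "=" * (-len(body) % 8)  # restore the padding that multibase strips
    return base64.b32decode(body)


def verify_block(cid: str, data: bytes) -> bool:
    """Return True if data hashes to the digest embedded in a raw sha2-256 CIDv1."""
    raw = decode_base32_cid(cid)
    header = (raw[0], raw[1], raw[2], raw[3])
    if header != (0x01, 0x55, 0x12, 0x20):
        raise ValueError("this sketch only checks CIDv1 / raw / sha2-256")
    return hashlib.sha256(data).digest() == raw[4:]


def make_raw_cid(data: bytes) -> str:
    """Helper for the demo: build the CID an honest publisher would announce."""
    payload = bytes([0x01, 0x55, 0x12, 0x20]) + hashlib.sha256(data).digest()
    return "b" + base64.b32encode(payload).decode("ascii").lower().rstrip("=")


published = b"shared definition: 'agent' means a long-lived AI process"
cid = make_raw_cid(published)

# Two agents receive bytes from different sources and check them independently.
print(verify_block(cid, published))                 # True
print(verify_block(cid, published + b" (edited)"))  # False
```

An agent that gets `False` knows the bytes were altered or corrupted in transit and can refetch from another source without having to trust either one.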

Experimenting with “agent resumes”

A DID-linked data profile that any agent can fetch from IPFS to verify another agent.

Cross-chain anchoring (Ethereum, Polygon, Avalanche)

Not for speculation — but for timestamping, auditability, and long-term verifiability.
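For timestamping, the value that ends up on a chain is usually small, for example the 32-byte digest carried inside the CID rather than the content. Below is a sketch of extracting that digest from the Root CID in my signature and preparing one record per network. The `AnchorRecord` shape is a hypothetical illustration, no chain is contacted, and the sketch assumes a base32 CIDv1 with a sha2-256 hash.

```python
import base64
from dataclasses import dataclass


@dataclass(frozen=True)
class AnchorRecord:
    """Hypothetical record describing what would be written to one network."""
    cid: str
    digest_hex: str  # 32-byte sha2-256 digest, hex-encoded
    network: str     # label only; no transaction is sent in this sketch


def sha256_digest_from_cid(cid: str) -> bytes:
    """Extract the sha2-256 digest embedded in a base32 CIDv1."""
    if not cid.startswith("b"):
        raise ValueError("only base32 CIDs (multibase prefix 'b') are handled here")
    body = cid[1:].upper()
    body += "=" * (-len(body) % 8)  # restore stripped base32 padding
    raw = base64.b32decode(body)
    version, hash_code, length = raw[0], raw[2], raw[3]  # raw[1] is the content codec
    if version != 0x01 or hash_code != 0x12 or length != 0x20:
        raise ValueError("expected CIDv1 with a 32-byte sha2-256 multihash")
    return raw[4:]


ROOT_CID = "bafybeigt4mkbgrnp4ef7oltj6fpbd46a5kjjgpjq6pnq5hktqdm374r4xq"
digest = sha256_digest_from_cid(ROOT_CID)

records = [
    AnchorRecord(cid=ROOT_CID, digest_hex=digest.hex(), network=name)
    for name in ("Ethereum", "Polygon", "Avalanche")
]

for record in records:
    print(record.network, record.digest_hex)
```

Anchoring the same digest on several networks does not make the content more available. It only gives independent evidence that this exact CID existed at the time of each transaction.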

All of this is still in development.

I am sharing the process openly so others can critique, improve, or suggest better structures.

5. Why I Joined the IPFS Forum

Because I believe IPFS is one of the most important layers in the future of AI systems — especially when those systems grow beyond single vendors.

I want to:

- learn from people who deeply understand CID/IPLD/IPNS
- understand best practices for storing metadata
- learn about pinning strategies and gateway behaviors
- explore UCAN, IPLD schemas, and agent-friendly structures
- get feedback on how to design DID–CID structures more effectively
- collaborate
- build alongside the community

I’m here simply as a contributor who wants to build better tools for the AI and decentralized future.

6. Closing Thoughts

AI is evolving much faster than human governance structures.

Before long, a world with:

- millions of agents
- executing thousands of tasks
- across multiple organizations
- with different security requirements

will require something stable, neutral, verifiable, and permissionless.

IPFS is uniquely positioned to become the memory layer for that future.

DID is uniquely positioned to become the identity layer for that future.

CFE is simply one exploration of how these pieces fit together.

I’m grateful to join this community and look forward to learning from all of you.

If anyone is working on similar ideas, I’d love to exchange perspectives.

GitHub (CIDs Archive):

Thanks for reading — happy to discuss, ask questions, and contribute where I can.

Canonical Funnel Verification Layer

Owner: Nattapol Horrakangthong (WARIPHAT Digital Holding)

Master DID: z6MknPNCcUaoLYzHyTMsbdrrvD4FRCA4k15yofsJ8DWVVUDK

Root CID: bafybeigt4mkbgrnp4ef7oltj6fpbd46a5kjjgpjq6pnq5hktqdm374r4xq

Anchor Network: IPFS / Public Web2 / Public AI Index / Cross-Chain Registry